Extracting deals from emails sounds straightforward until you attempt it at scale. It quickly becomes a deceptively hard NLP problem: unstructured text, image-only creatives, ambiguous marketing language, and no standardized format.

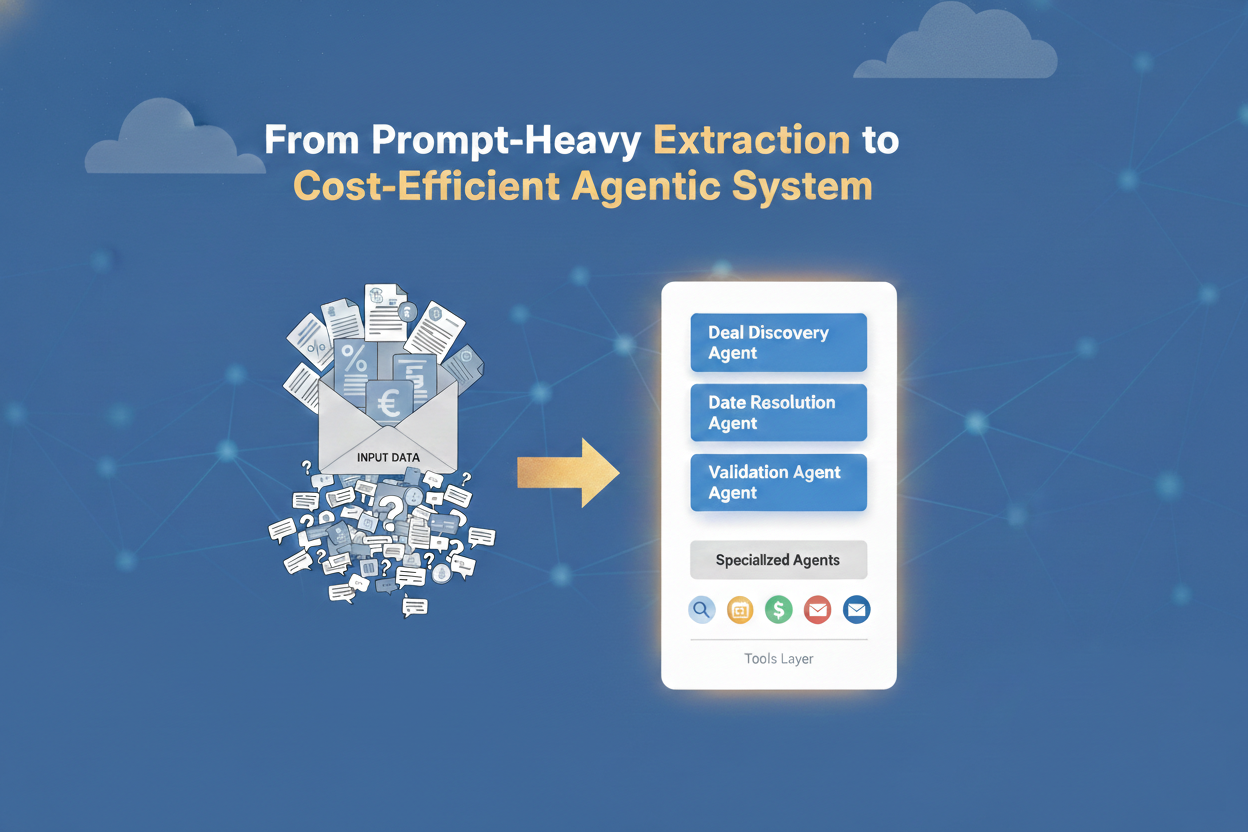

Solving it required multiple architectural shifts. What started as prompt-heavy extraction ultimately evolved into a cost-efficient agent-based system.

I designed this production-grade pipeline for Offerivo to reliably identify deals, coupons, and expiration dates while keeping inference costs under control.

Revisiting the Problem

My first attempt dates back to 2019, when I tried solving this with a hand-written parser. It quickly became clear that brittle rules do not survive the variability of real-world email.

Large language models made this problem worth revisiting. I rebuilt the system from scratch, experimenting with multiple pipelines, models, and workflow patterns to achieve reliable extraction without driving inference costs too high.

One lesson emerged quickly: smaller models are far more capable than most teams assume, especially when given tightly scoped responsibilities. That realization shaped every iteration that followed.

Architecture Evolution

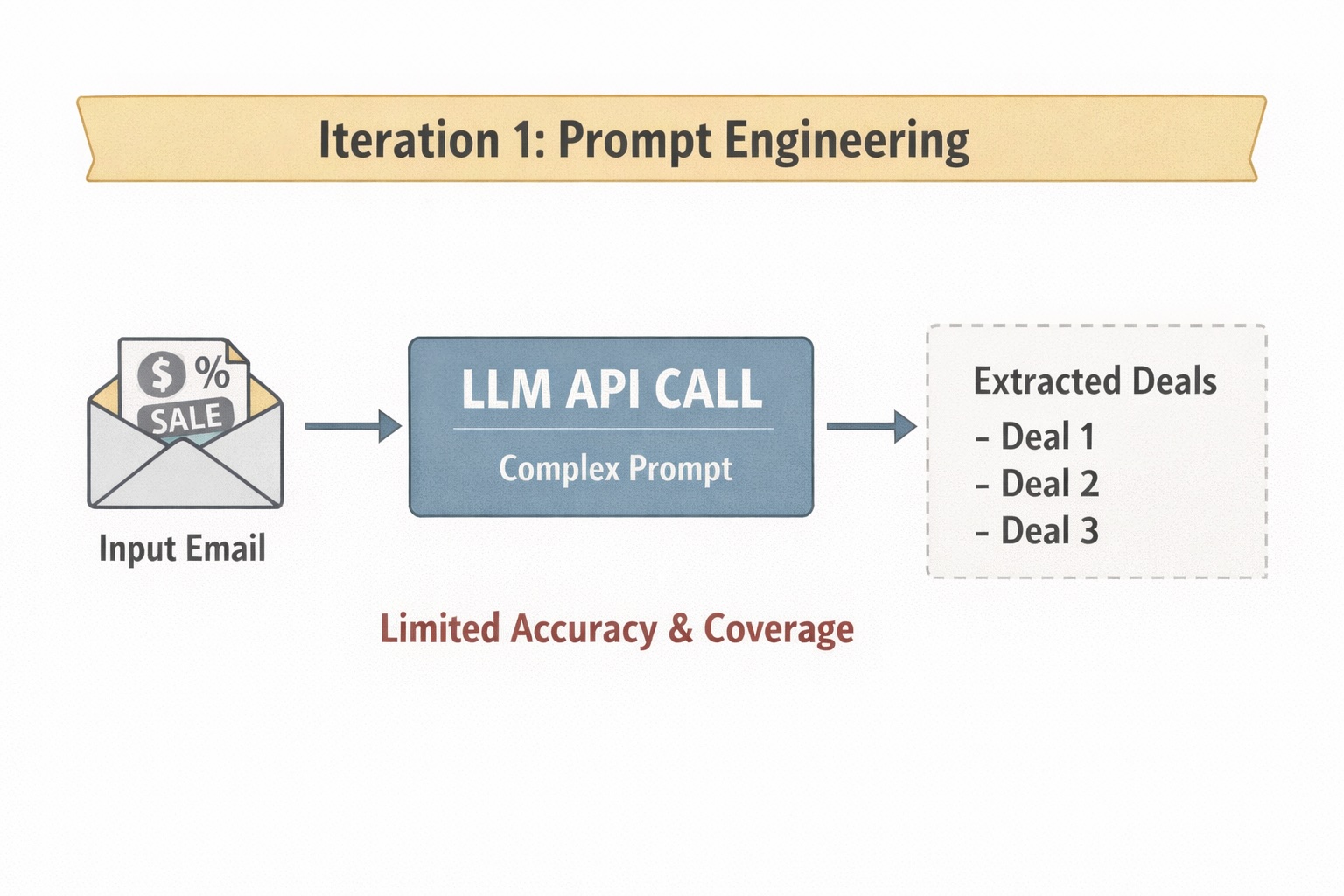

Iteration 1: Prompt Engineering

I started with a single LLM call backed by heavy prompt engineering to extract deals directly from each email.

This worked for simple, clearly structured promotions but broke down quickly in real-world scenarios. The model frequently surfaced credit card offers as deals and struggled when multiple products with competing discounts appeared in the same email.

The limitation became obvious: prompt-heavy systems are brittle. As variability increased, reliability dropped.

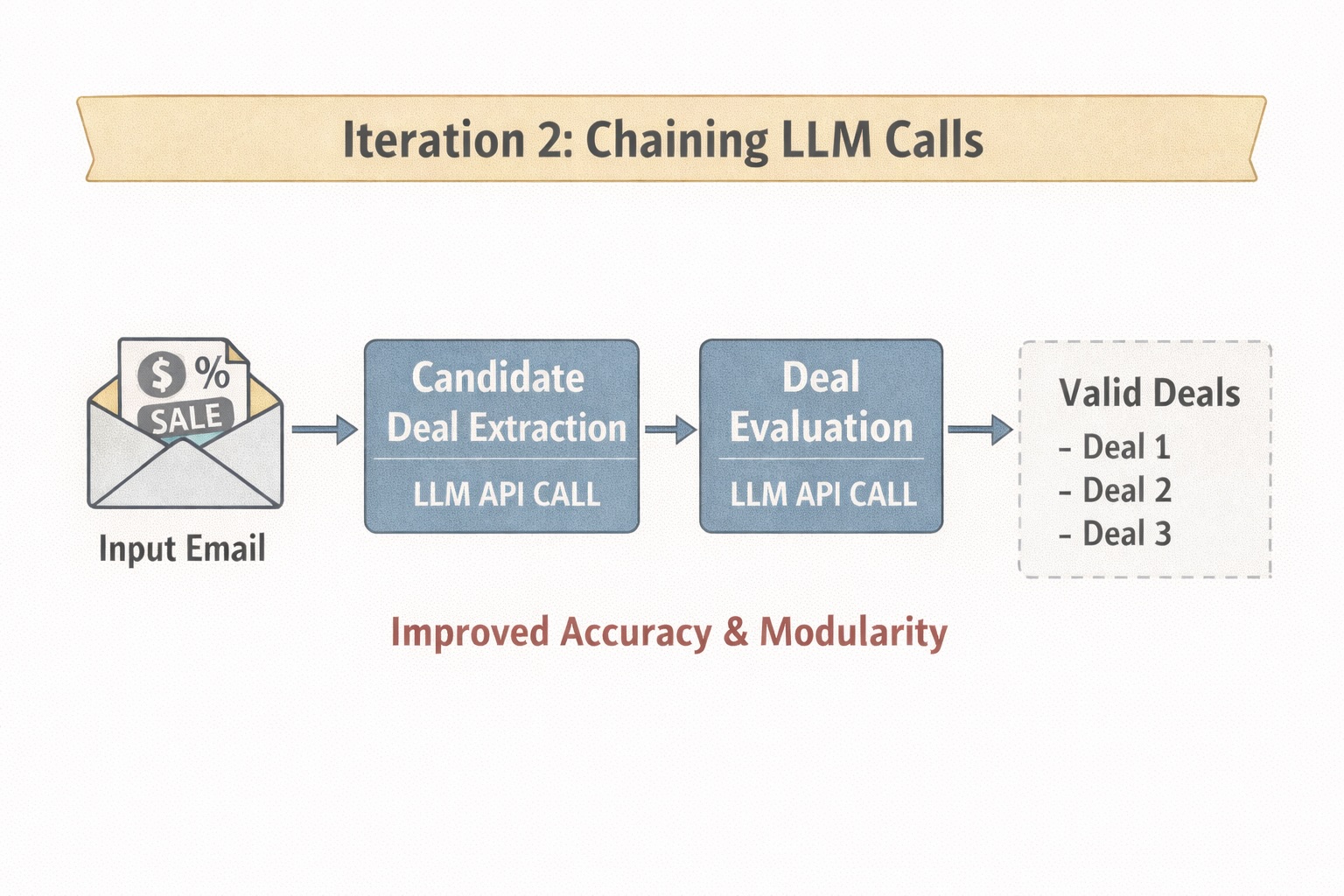

Iteration 2: Chaining LLM Calls

I restructured the pipeline into two sequential steps: first extract candidate deals, then evaluate whether they were truly offers worth surfacing.

This separation improved precision and made the system easier to reason about. It also marked my first shift from prompt design toward workflow design.
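As a rough illustration of the two-step chain, the sketch below separates a recall-oriented extraction call from a precision-oriented verification call. The `llm` callable, the prompt wording, and the `title`/`discount` keys are all assumptions made for this example, not the prompts or schema used in the actual pipeline.

```python
import json
from typing import Callable, Dict, List

# Hypothetical LLM interface: takes a prompt string, returns the model's text reply.
LLM = Callable[[str], str]

# Illustrative prompts only; the real prompts are not shown in this article.
EXTRACT_PROMPT = (
    "List every candidate deal in this email as a JSON array of objects "
    "with keys 'title' and 'discount'.\n\nEmail:\n{email}"
)
VERIFY_PROMPT = (
    "Is the following a genuine shopping deal worth surfacing? "
    "Answer only 'yes' or 'no'.\n\nCandidate:\n{candidate}"
)


def extract_candidates(email_text: str, llm: LLM) -> List[Dict]:
    """Step 1: pull out anything that looks like a deal."""
    reply = llm(EXTRACT_PROMPT.format(email=email_text))
    try:
        parsed = json.loads(reply)
    except json.JSONDecodeError:
        return []  # treat unparseable output as "no candidates"
    if not isinstance(parsed, list):
        return []
    return [item for item in parsed if isinstance(item, dict)]


def is_real_deal(candidate: Dict, llm: LLM) -> bool:
    """Step 2: judge one candidate in isolation."""
    reply = llm(VERIFY_PROMPT.format(candidate=json.dumps(candidate)))
    return reply.strip().lower().startswith("yes")


def extract_deals(email_text: str, llm: LLM) -> List[Dict]:
    """Chain the two steps: extract first, then keep only verified candidates."""
    return [c for c in extract_candidates(email_text, llm) if is_real_deal(c, llm)]
```

Because the verifier sees one candidate at a time, a false positive such as a credit card offer can be rejected without touching the extraction prompt.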

However, the improvement exposed a gap I had overlooked. A meaningful portion of promotional emails contained little to no machine-readable text. Many were large marketing images with the actual deal embedded inside the creative.

Solving extraction was no longer just an NLP problem. It had become an input problem.

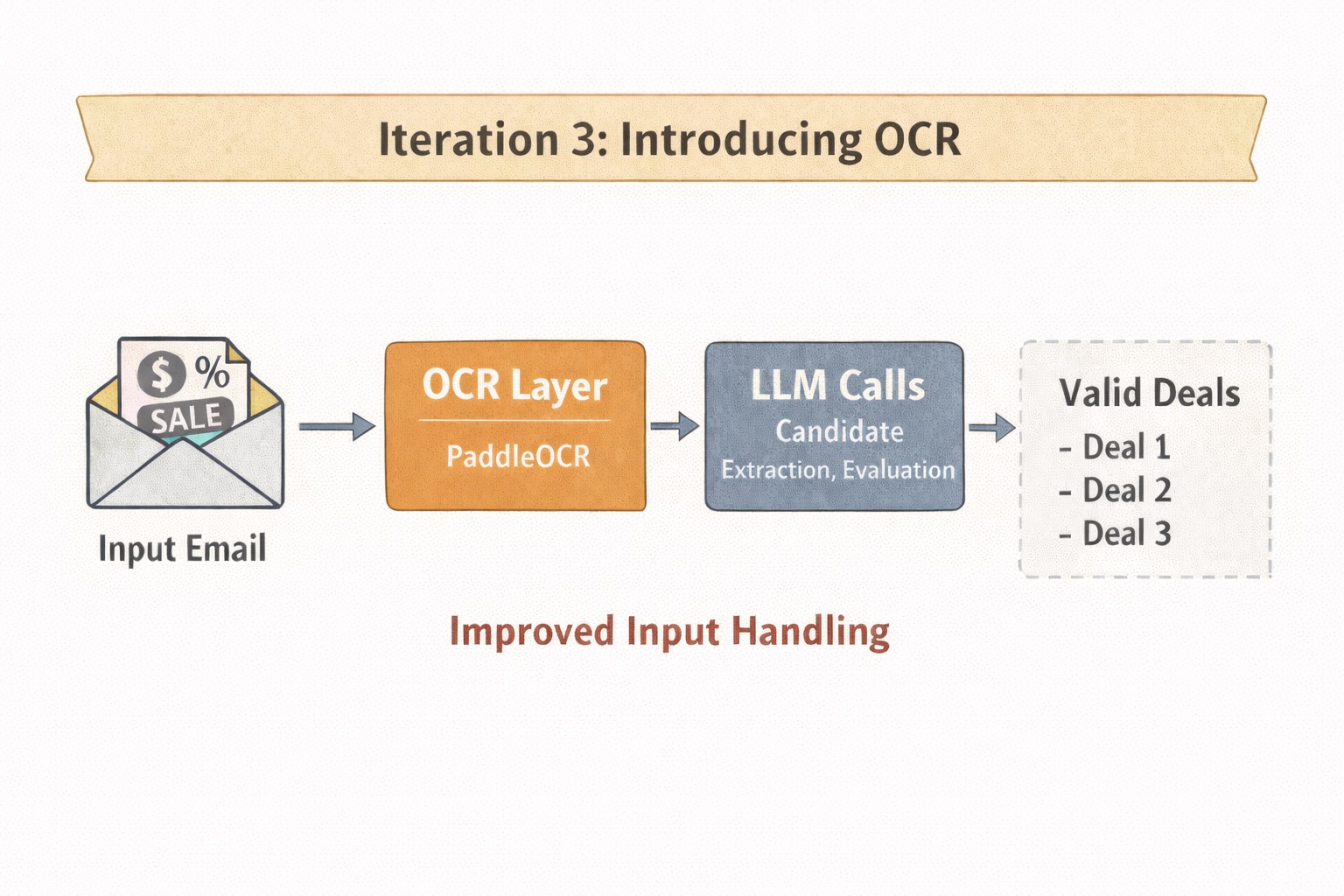

Iteration 3: Introducing OCR

At this point, the constraint was no longer the model but the input itself. When the deal lives inside a marketing image rather than in the email's text, there is nothing for the model to read.

To address this, I introduced an OCR layer into the pipeline.
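One way to wire an OCR layer in is to run it only when an email carries too little machine-readable text. The sketch below is an assumption about how such routing could work, not a description of the production logic: the `ocr` callable, the `MIN_TEXT_CHARS` threshold, and the function names are hypothetical, and the HTML handling uses only Python's standard library.

```python
from html.parser import HTMLParser
from typing import Callable, List

MIN_TEXT_CHARS = 200  # assumed threshold; tune against real emails


class _TextAndImages(HTMLParser):
    """Collects visible text and <img> sources from an HTML email body."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: List[str] = []
        self.image_srcs: List[str] = []
        self._skip_depth = 0  # greater than zero while inside <style> or <script>

    def handle_starttag(self, tag, attrs):
        if tag in ("style", "script"):
            self._skip_depth += 1
        elif tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.image_srcs.append(src)

    def handle_endtag(self, tag):
        if tag in ("style", "script") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def email_text_for_extraction(
    html: str,
    ocr: Callable[[str], str],  # hypothetical: image source in, recognized text out
    min_chars: int = MIN_TEXT_CHARS,
) -> str:
    """Return the email's text, adding OCR output only when the text is sparse."""
    parser = _TextAndImages()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.text_parts)
    if len(text) >= min_chars:
        return text  # enough machine-readable text, skip OCR entirely
    ocr_text = [ocr(src) for src in parser.image_srcs]
    return " ".join(part for part in [text, *ocr_text] if part)
```

Skipping OCR for text-rich emails keeps the expensive step off the common path, and the `ocr` callable can wrap whichever engine is chosen.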

Several managed OCR services were available, but their pricing made them difficult to justify at scale. After evaluating multiple options, the choice narrowed to two open-source libraries, EasyOCR and PaddleOCR. PaddleOCR ultimately stood out, significantly outperforming the alternatives in accuracy while remaining cost-effective.

Running OCR inside AWS Lambda presented its own challenges due to runtime and memory constraints. Packaging PaddleOCR into a container image and allocating sufficient memory proved to be a practical solution, allowing the pipeline to remain fully serverless.

This change materially improved deal detection and pushed the system closer to production reliability. However, logical inconsistencies still surfaced, particularly in how extracted information was interpreted across emails. Real-world data rarely cooperates with clean pipelines, and fixing extraction alone was not enough.

The system needed stronger reasoning, not just better text capture. That realization led to the next architectural shift: specialized agents.

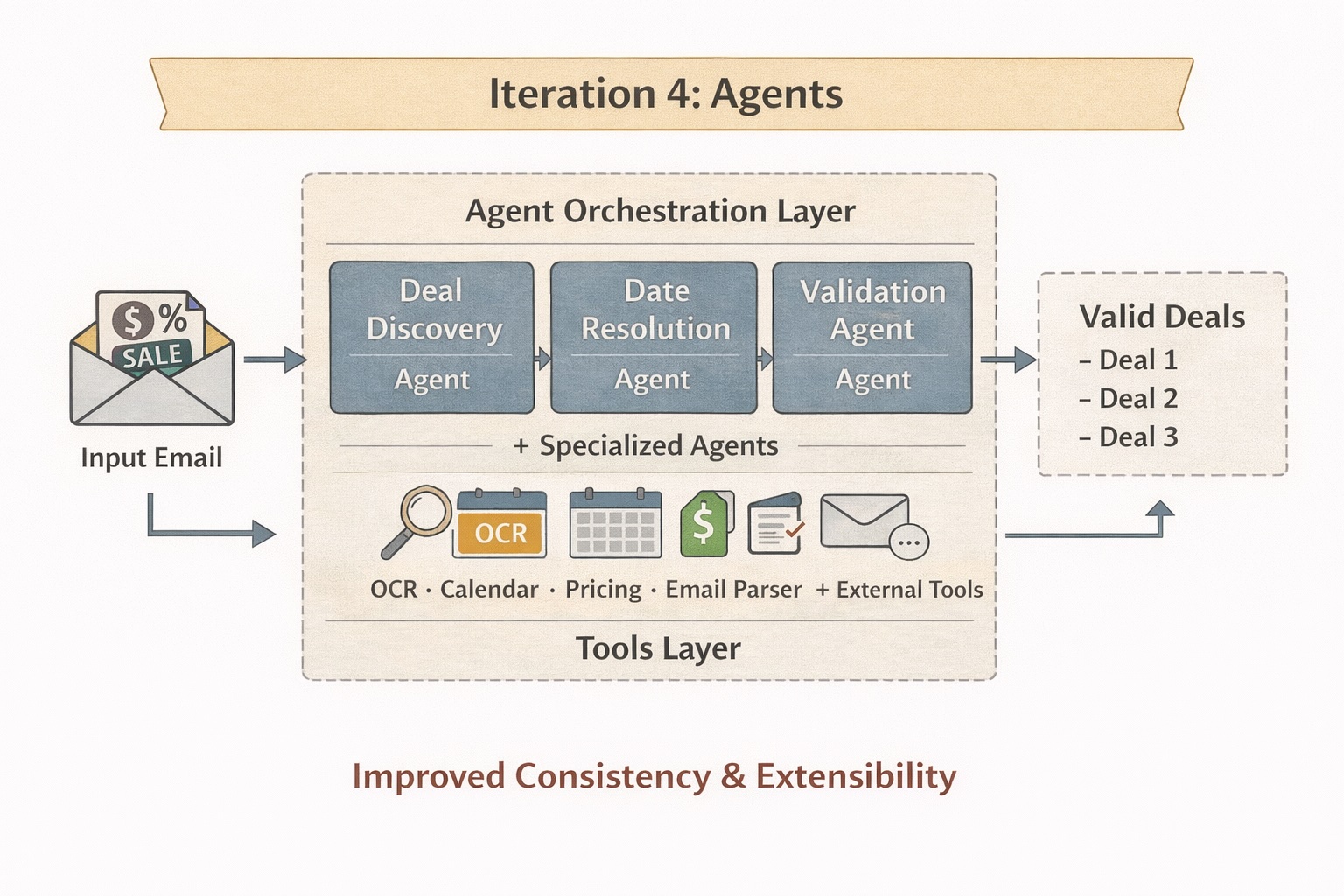

Iteration 4: Agents

By this stage, the core building blocks were in place, but the pipeline still lacked consistent reasoning. The system needed components that could focus on narrowly defined responsibilities.

Small language models (SLMs) perform exceptionally well when constrained to tightly scoped tasks, so I re-architected the pipeline around specialized agents. Each agent was responsible for a specific function such as deal discovery, date resolution, or validation, with access to tools like OCR and calendar lookups when needed. Simpler labeling tasks were routed to a nano model to keep inference costs low.
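To make the idea of a calendar tool concrete, here is a minimal sketch of what a date-resolution agent might call to turn a phrase like "ends Sunday" into an actual date. The function name and its rule (the first matching weekday on or after the send date) are assumptions for illustration; the article does not specify how the real tool resolves dates.

```python
from datetime import date, timedelta
from typing import Optional

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"]


def resolve_weekday(phrase: str, sent_on: date) -> Optional[date]:
    """Return the first date on or after sent_on whose weekday is named in phrase.

    Returns None when the phrase names no weekday, so the agent can fall back
    to another strategy instead of guessing.
    """
    lowered = phrase.lower()
    for index, name in enumerate(WEEKDAYS):
        if name in lowered:
            # date.weekday() uses Monday=0 .. Sunday=6, matching WEEKDAYS order.
            days_ahead = (index - sent_on.weekday()) % 7
            return sent_on + timedelta(days=days_ahead)
    return None
```

Keeping this arithmetic in a deterministic tool means the model only has to recognize the phrase, not compute the calendar date itself.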

This marked the architectural turning point of the system: a shift from sequential processing to specialized agents with clearly bounded responsibilities.

The agent-based approach significantly improved reliability while preserving cost efficiency. More importantly, it made the system easier to extend. Adding new capabilities no longer required redesigning the pipeline, only introducing a new agent with a well-defined role.
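The extensibility point can be sketched as a small registry in which each agent declares its role and the model tier it runs on. Everything here (the `Agent` and `Pipeline` names, the `"nano"` and `"small"` tier labels, and the stub agent logic) is hypothetical and stands in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# An agent step receives the shared state and returns an updated copy.
Step = Callable[[Dict], Dict]


@dataclass(frozen=True)
class Agent:
    name: str   # the agent's single responsibility
    model: str  # hypothetical model tier label, e.g. "nano" or "small"
    run: Step


class Pipeline:
    def __init__(self) -> None:
        self._agents: List[Agent] = []

    def register(self, agent: Agent) -> None:
        """Adding a capability means adding one agent; existing agents are untouched."""
        self._agents.append(agent)

    def process(self, email: Dict) -> Dict:
        state = dict(email)
        trace: List[str] = []
        for agent in self._agents:
            state = agent.run(state)
            trace.append(f"{agent.name}:{agent.model}")
        # The trace records which agent ran on which tier, useful for cost review.
        return {**state, "trace": trace}


if __name__ == "__main__":
    pipeline = Pipeline()
    # Stub agents: real ones would call a model of the declared tier.
    pipeline.register(Agent("discover", "small", lambda s: {**s, "deals": ["20% off shoes"]}))
    pipeline.register(Agent("label", "nano", lambda s: {**s, "category": "apparel"}))
    print(pipeline.process({"subject": "Spring sale"}))
```

Declaring the tier per agent makes the cost of each responsibility visible at the point where it is registered.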

Designing this system reinforced principles that now shape how I approach production AI.

Lessons from Building a Cost-Efficient Agentic System

Building this pipeline shifted my perspective on production AI:

- System Design > Prompt Cleverness: Workflow architecture consistently outperforms increasingly complex prompts.

- Preprocessing is Foundational: Real-world inputs are messy, making preprocessing just as critical as model selection.

- Small Models, Scoped Well, Win: Specialized agents built on smaller models often outperform larger generalist systems while remaining far more cost-efficient.

- Reliability Emerges from Specialization: Accuracy improves when models are given narrowly defined responsibilities rather than broad mandates.

- Cost is an Architectural Constraint: Cost isn’t a final optimization step; it has to inform the design from the first iteration.

Effective AI systems aren’t born from a single powerful model. They emerge from the thoughtful orchestration of components that each do one job exceptionally well.

This progression from prompts to workflows to agents has proven far more durable than relying on model capability alone.